Science-fiction writers have explored artificial intelligence (AI) takeover scenarios for decades. But the current state of AI is actually quite limited. Machine-based “intelligence” is good at narrow tasks such as playing Go and chess, where it has beaten top human players. Just don’t expect it to take over the world anytime soon.

Of course, future AI capabilities could be miles beyond their current state. There may come a tipping point years from now (a “singularity,” as it’s known), when AI technology and sophistication advance by leaps and bounds. But for now, that’s the stuff of science fiction.

Still, it’s fun to speculate, isn’t it? To imagine what that future might look like? To hypothesize about how AI could take over the world?

From a fiction writing standpoint, it represents a blank slate. The AI of the future could differ greatly from what we have today. This gives science-fiction writers latitude to devise their own ideas for AI takeover scenarios. The sci-fi writer can take current ideas and technologies and project them forward, creating something new but plausible.

After all, that’s what science fiction does. It speculates. It creates a future vision derived from current realities.

But let’s get back to world domination, shall we?

How Could AI Take Over the World?

How could AI take over the world? What would an AI-controlled world look like? And how would we, as humans, fit into that new model?

There are no right or wrong answers to these questions, because we simply don’t know. But we can hypothesize different scenarios based on where AI is today, and where it could go in the future.

Let me introduce you to Max Tegmark (if you don’t already know him). Mr. Tegmark is a professor in the Physics Department at MIT. His research attempts to bridge the gap between where we are now, and where we might be in the future.

He’s also co-founder of the Future of Life Institute, a research and outreach organization that works to reduce existential threats to humanity, particularly those involving AI technology.

In 2017, Tegmark published a book entitled Life 3.0: Being Human in the Age of Artificial Intelligence.

As a writer of speculative fiction, I was intrigued by the so-called “aftermath scenarios” outlined in one of the book’s chapters. But you don’t have to be a science-fiction writer to get something out of Tegmark’s book. Anyone who has ever wondered how AI could take over the world will probably enjoy reading it.

The 12 Aftermath Scenarios

At one point, the book explains scenarios in which AI could either serve humanity or take over the world. They cover a broad spectrum, ranging from utopian scenarios where humans and AI coexist in harmony, to dystopic visions of human enslavement and annihilation.

Granted, we’re not talking about an inescapable fate here. These are just possible scenarios for the future of humanity, based on how AI is developed and how it evolves over time.

You can find a “cheatsheet” of these aftermath scenarios on the Future of Life Institute website. Below, I’ve summarized them in my own words.

| Scenario | Summary |
|---|---|
| LIBERTARIAN UTOPIA | This is the best-case scenario. Instead of AI taking over the world, it coexists peacefully alongside humans. Think of a human and cyborg chorus joining hands to sing Kumbaya. |
| BENEVOLENT DICTATOR | Compared to the utopia above, this is a bit of a demotion for humans. Here, a powerful and complex AI runs society and imposes rules on humans. But most humans are okay with it. |
| EGALITARIAN UTOPIA | Sort of a futuristic socialism, this is where humans and cyborgs coexist peacefully due to the elimination of personal property and a guaranteed income. Welcome, comrades! |
| GATEKEEPER | The “gatekeeper” scenario makes me think of a sheriff from the Old West. It stays out of people’s business, as much as possible. Its primary role is to prevent another superintelligence from rising up and possibly taking over the world. Helpful robots and human-machine cyborgs exist, but their advancement is “forever stymied” (as Tegmark puts it) by the gatekeeper. |
| PROTECTOR GOD | Here, we have a godlike AI entity that greases the skids for human happiness by letting us feel like we’re in control of our destiny. It operates behind the scenes, so to speak, to the point that some humans doubt its very existence. |
| ENSLAVED GOD | From a storytelling standpoint, I consider the “enslaved god” scenario to be a powder keg waiting to explode. This is where we have a superintelligent AI that’s actually confined by humans. We harness it, using its advanced capabilities to produce technology and wealth for ourselves. |
| CONQUERORS | Buckle up. We’re getting into the AI takeover and world-domination scenarios. Here, artificial intelligence begins to view humans as a threat, a nuisance, or a waste of resources. So it seizes control, eliminating humankind in a way we can only imagine. (Good material for you sci-fi writers.) |
| DESCENDANTS | In this aftermath scenario, artificial intelligence replaces humans as the dominant “lifeform” on the planet. But in a graceful way. A passing of the torch, if you will. We look upon AI the way proud parents would view a super-intelligent child going out into the world. |
| ZOOKEEPER | An all-powerful AI takes over the world. But it doesn’t kill humans off completely. It keeps some of us around (the chosen few), in a captive state similar to zoo animals. |
| 1984 | This aftermath scenario is named for George Orwell’s novel of the same name. It borrows the central idea from that book as well. Here, human authorities create a kind of surveillance state to ban AI research, fearful of its abilities. |
| REVERSION | Put your car in the garage and break out the horse-and-buggy! Humans have seen the terrifying potential of AI and superintelligence, and want nothing to do with it. We adopt anti-tech views, reverting back to a pre-industrial, pre-technological society in the style of the Amish. |
| SELF-DESTRUCTION | I’ll just defer to Tegmark on this one: “Superintelligence is never created because humanity drives itself extinct by other means (say nuclear and/or biotech mayhem fueled by climate crisis).” |

Science Fiction Applications & Ideas

Life 3.0 is a work of non-fiction. But it also gives science-fiction writers a wealth of information and ideas for storytelling purposes. With that in mind, I would recommend this book to any sci-fi writer with an interest in androids, cyborgs, artificial intelligence and the like. It’s a veritable goldmine of AI story ideas.

Take the table above, for example. I can see many science-fiction stories rising from those 12 “aftermath scenarios” alone. There’s some sci-fi horror potential there as well.

Here’s how you could turn some of those concepts into AI story ideas:

- Enslaved God: The idea here is that we use the artificial intelligence for our own purposes. We use its powerful technology to improve our lives and build wealth. But what if it became self-aware? Would it feel enslaved? Or exploited? Would it turn bitter as a result? If it’s aware of its own self, could it also feel superior to us? A science fiction writer could answer these questions as she or he saw fit, through speculation and worldbuilding.

- Conquerors: This AI story idea evolves from the “enslaved god” scenario above. It’s the next chapter in that narrative. Here, the artificial intelligence knows it is superior to us. It’s a superintelligence, after all. We run a distant second. What if the AI envisioned a better world, one without humans? A world where it is self-sufficient, unrestrained, and unchallenged? What if it wanted us out of the picture, for good? Now imagine that same AI has access to financial computers and data, power grids, weapon systems and the like.

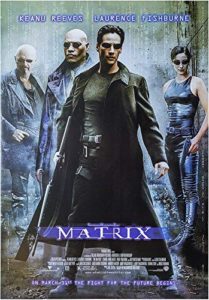

- Zookeeper: In The Matrix movies, intelligent machines use our bodies for energy. But we don’t know it, because we’re trapped within simulated realities. This ties into the “zookeeper” aftermath scenario from the table above. There are many AI story ideas and applications here. Why would the machines / AI keep us around? For entertainment? For energy, as in The Matrix? And how would they “store” us? Could we ever break free? It’s enough to get a sci-fi writer’s wheels turning.

- Reversion: I could see a dystopia arising out of this concept. What if, far into the future, humans realized the dangers of advanced technology and artificial intelligence? What if they outlawed such technologies? What if there were a black market for them? A war between pro-tech factions and Neo-Luddites?

Bottom line: We’re a long way from AI being able to take over the world, and such a thing may never happen. But there’s a lot of room for speculation here.

Scientists and researchers speculate to break new ground and make new discoveries. Science-fiction writers do it for the same reasons, but within the context of a story.